
A risk assessment on the current state of IoT (Internet of Things) security and what needs to be done moving forward. Follow Daniel Burns on Twitter, @DBurnsOfficial.

Written by Daniel Burns

The following article is a final project completed for a Cyber Warfare class at San Diego State University. It pairs a video pitch with a written primer on the need for updated cyber defense policy within the United States Government.


The future of the personal computer is within ARM's reach: the transition from x86 to ARM silicon.

4/25/2021

Written by Daniel Burns

Abstract

This article covers the transition of personal computers to ARM (Advanced RISC Machine) processors. It goes into detail about Apple’s current transition from Intel to its own ARM-based Apple Silicon processors and how that transition will affect the rest of the PC industry. It discusses the background of ARM CPUs, Apple’s past transition from IBM PowerPC to Intel, and the major advantages of switching to an all-new architecture. I explain how Intel’s innovation stagnation has caused the PC industry to seek alternatives while Intel changes direction, and I try to understand why the industry has only recently realized ARM’s full potential even though it has been around for the past 36 years. Other topics include the details of ARM’s success in mobile devices, Apple’s A-series mobile chips always being ahead of the game, and the breakthroughs ARM keeps achieving over the traditional x86 architecture in both performance and efficiency. I attempt to answer the question of whether it is time to fully transition the rest of the PC industry to ARM-based processors.

Introduction

With the introduction of Apple Silicon M1-based Macs, Apple has awoken the personal computer industry once again. Apple has done this by bringing the same CPU architecture it has used in its mobile devices for the past 10 years over to the Mac desktop and laptop platforms. Its new ARM-based chip, the M1, easily outperforms the legacy x86 Intel chips it replaces in three major categories: value, performance, and efficiency. Both smartphones and tablets have been using ARM-based processors for the past 15 years and have continually shown major leaps in performance and efficiency with each new generation. Specifically, since the iPhone 5S in 2013, Apple’s A-series mobile chips in iPhones and iPads have consistently outperformed stock PC chips and even Qualcomm’s own Snapdragon ARM SoCs (Sims 2017).
This is an impressive feat for Apple’s silicon team, which has stayed years ahead of the competition and proven ARM’s worthiness in the modern market. Apple’s influence on the industry has caused Windows PC manufacturers to look at alternatives to x86 Intel and AMD CPUs, whose year-over-year performance increases have become stagnant. The entire PC industry should learn from Apple’s success with ARM and apply it to their own roadmaps. There hasn’t been a better time than now to transition the entire PC industry to ARM processors, just as the industry has successfully done with mobile devices.

What is ARM and why is it used in mobile?

ARM stands for Advanced RISC Machine and belongs to the family of Reduced Instruction Set Computing, or RISC for short. RISC describes a computer processor that is small, highly specialized, and highly optimized for a specific set of instructions. ARM is more specialized than other architectures such as x86 Intel chips or other non-ARM complex instruction set computers, CISC for short (Fulton 2020). ARM is leading the way as the up-and-coming processor found in compact devices such as smartphones, tablets, TVs, fridges, speakers, and cars. ARM is found in devices that need to be small and power efficient, as well as devices that need compact, all-in-one SoCs (Systems on a Chip). Past processors consisted of a single processing unit on one chip. In contrast, modern ARM processors are SoCs, which combine the CPU with memory, interfaces, radios, and extra core designs on one chip. These extra cores can serve specific tasks, such as the dedicated machine learning cores found in Apple’s A-series mobile device chips. Mobile devices have used ARM specifically for its performance per watt. This is a benefit for laptop users because lower wattage enables better battery life. Because of ARM’s massive success and efficiency in mobile devices, it is probable that the PC industry will look to it as the next best avenue in processor technology (Regidi 2020).
x86’s Background

ARM processors are becoming a more logical choice for laptops and desktops as those machines get thinner and smaller than ever before and end users become more mobile. Blem, a researcher at the University of Wisconsin, sums up why ARM is the next best option. “Because of the vast difference in how ARM handles instructions compared to x86, the efficiency, power draw, and speed gained from such a small package, performance per watt, in essence, ARM is the ideal choice for mobile devices” (Blem 2013). The x86 architecture has been around since 1978 and was developed by Intel for a 16-bit microprocessor called the 8086. It was revolutionary for its time because memory segmentation let it address far more memory than Intel’s previous 8-bit architecture. The name x86 comes from the 86 in the name 8086. This naming convention has continued even as the industry transitioned from 32-bit to 64-bit computing. Today x86 refers to the instruction set that both Intel and AMD still use. For the past 4 years both Intel and AMD, Intel more so, have been stuck in a stagnant holding pattern with their current generation of processors. Their chips have been running hot and drawing too much power while not improving drastically generation over generation, unlike in past iterations. Consider it a type of Moore’s Law roadblock: an aging architecture running head-on into thermal constraints.

Intel’s Stagnation

Specifically, Intel has been stuck on the same 14nm (nanometer) process it has been using since 2014. Aside from pushing more cores into it and increasing the base clock and wattage, the performance margins on the aging fabrication process are getting slimmer and slimmer. Management at Intel also shares the blame; former CEO Bob Swan was recently replaced by former VMware CEO Pat Gelsinger.
Swan focused more on management and on pushing buzzworthy emerging technology categories such as AI (Artificial Intelligence) and 5G than on what Intel does best: chip development. With Gelsinger’s engineering experience, hopefully Intel can get back to its roots. Apple and Qualcomm are on a 7nm process, beating most of Intel and AMD’s fastest CPUs (Blem 2013). Apple has already begun moving to 5nm with its newest chips, a step that has most likely been on its well thought out roadmap for years. Apple and Qualcomm’s smaller and more power efficient ARM chips have outperformed the older x86 architecture in mobile performance. As a result, Lei points out that “ARM-based mobile smart devices are becoming more and more ubiquitous and the preferred platform for users’ daily computing needs is shifting from traditional desktop to mobile smart devices” (Lei Xu 2012). The smartphone industry has proven ARM’s worthiness in our mobile devices. This makes it highly likely that consumer desktops and laptops will adopt ARM as well.

Apple’s Transition to the M1

Apple’s transition to the M1 will not be easy, as it requires a transition period of at least two full years. A ramp in production is needed to fully achieve independence from a tech giant such as Intel or AMD. Apple needs the full support of developers worldwide to back its transition. This will ensure that the end user’s favorite apps work as well as, or even better than, they did on x86 Macs. Apple’s biggest advantage in using its own ARM-based processor is having control of the full stack. Apple itself designs and ships both the core hardware and the software in a Mac, giving it complete and tight control over how its devices perform and how long they are supported. Apple has a very strong connection with the developer community, more so than Google and Microsoft with their respective development platforms.
On the iOS and iPadOS side, app developers have Xcode to build apps with a massive ensemble of tools and kits that help make the app building experience as seamless as possible. Xcode is Apple’s all-in-one IDE (integrated development environment) used to develop software for macOS, iOS, iPadOS, watchOS, and tvOS. This tight-knit process allows a level of integration between software and hardware that is so good, even Google and Microsoft still haven’t been able to fully replicate it.

Apple’s Previous Experience

This isn’t the first time Apple has transitioned from one CPU architecture to another. From 1992 to 2006, Apple relied on a family of processors called the PowerPC (Dignan 2020). It was built out of an alliance between Apple, IBM, and Motorola. The PowerPC chip was a RISC-based ISA (instruction set architecture) that, at the time of its creation, was a powerful chip. Then, in 2006, Apple began its transition to Intel for one big reason: efficiency, specifically in performance and heat output. Intel’s Core architecture showed a huge difference in heat output compared to the PowerPC G5 at the time. The PowerPC G5 required a massive cooling system to deal with the amount of heat it dissipated. That’s one of the reasons why a G5-powered laptop from Apple never came to fruition. During one brief period before Apple switched to Intel, Apple produced a liquid-cooled Power Mac G5 desktop because of the chip’s tendency to overheat. During this transition, Apple tried to make things easier on developers and users by creating Rosetta. Rosetta was a dynamic binary translation program that allowed PowerPC-only apps to run on Intel machines. This helped bridge the time gap while developers made their apps work with Intel Macs.

Apple’s Advantage

Apple is now once again asking developers to transition their apps, but this time from Intel to Apple Silicon.
Apple made this easier in 2020 with Rosetta 2, a real-time instruction translator that allows x86 Intel apps to run on Apple Silicon-based Macs. Fulton explains how Rosetta 2 works in macOS: “For MacOS 11 to continue to run software compiled for Intel processors, the new Apple system will run a kind of "just-in-time" instruction translator called Rosetta 2. Rather than run an old MacOS image in a virtual machine, the new OS will run a live x86 machine code translator that re-fashions x86 code into what Apple now calls Universal 2 binary code -- an intermediate-level code that can still be made to run on older Intel-based Macs -- in real-time. That code will run in what sources outside of Apple call an "emulator," but which isn't really an emulator in that it doesn't simulate the execution of code in an actual, physical machine (there is no "Universal 2" chip)" (Fulton 2020). Rosetta 2 has proven remarkably capable at translating most Mac apps written for x86 so that they run quickly on ARM-based Macs. In a YouTube video, Quinn Nelson of the channel Snazzy Labs demonstrated the power of the M1 and its translation using Rosetta 2. Quinn ran a Windows app inside Wine, which translates Windows binaries to run as Intel Mac binaries; Rosetta 2 then translated the resulting Intel code to Apple Silicon. The app, a game in this case, ran flawlessly, even faster than on a lower-specced Windows machine (Nelson 2020). This example shows the significance of Rosetta 2 and the M1’s ability to run non-native applications.

How Does the M1 Compare?

With its A-series mobile chips, Apple has benchmarked significantly higher than Qualcomm in performance measures while also edging closer to what Intel has to offer (Sims 2017). This shows up in synthetic benchmarks, lab stress tests, and real-world usage.
In the past two years we have seen Apple’s performance exceed what Intel and AMD offer. For example, the latest iPhone 12 Pro uses Apple’s A14 Bionic SoC, a 6-core, 3.0 GHz processor paired with 6 GB of RAM, and it posts a Geekbench score of 3948 on multi-core and 1587 on single-core. Compare that to a quad-core 4.0 GHz Intel Core i7 4790K processor from 2014, with a multi-core score of 3945 and 1058 on single-core (Geekbench.com Data Charts). That’s comparing a full-fledged, unlocked desktop CPU cooled by a heatsink to a fanless mobile chip found inside a smartphone. This is remarkable because the A14 outperforms a full-fledged desktop processor despite its tight thermal constraints. Sims sums up why Apple’s success has been so consistent: "There is no denying that Apple has a world class CPU design team that has consistently produced the best SoCs in the world over the last few years. Apple’s success isn’t magic. It is a result of excellent engineering, a good lead time over its competitors, and the luxury of making SoCs with lots of silicon for one or two products at a time" (Sims 2017). In November of 2020 at Apple’s “One more thing” event, Apple launched the M1-powered 13-inch MacBook Air, 13-inch MacBook Pro, and Mac mini desktop. Of the three, only two are actively cooled with a fan. The MacBook Air, ironically, has no fan or traditional heatsink; it relies on a single metal plate for heat dissipation (Goldheart 2020). Casey details the performance increase over previous generation machines with an Intel processor: "Both M1 MacBook’s are rated for substantial leaps over their predecessors. Specifically, Apple notes the M1 MacBook Air is 3.5x as fast as the most-recent Intel MacBook Air, and that the M1-based MacBook Pro is 2.8x as fast as its predecessor.
That's the kind of performance that could topple the Intel Core i9 version of the 16-inch MacBook Pro (which isn't one of the first round of Macs getting ported to M1 chips)" (Casey 2021). The M1 is an 8-core CPU split between 4 high-performance cores and 4 high-efficiency cores, paired with either 8 or 16 GB of LPDDR4X memory integrated into the M1 package. This makes memory access quicker than with the separate memory chips found in previous models. The current M1-equipped machines all score roughly the same, which is expected given that they all use the same M1 processor. On Geekbench the M1 found in the new Macs has an average score of 7681 on multi-core and 1740 on single-core performance. That puts it right next to the $10,000 Mac Pro with its 8-core server-class Xeon workstation CPU, which has a multi-core score of 7959. The M1 also outperforms the $3,000 8-core Intel Core i9 16-inch MacBook Pro with its multi-core score of 6850 (Geekbench.com Score Charts). This is the kind of performance advantage you can expect to see across the board when every Mac switches to ARM. Given the M1’s track record, we can anticipate even larger gains from the chips in the iMac and Mac Pro refreshes coming later this year. Apple has achieved an unheard-of 20-hour battery life on the M1 13-inch MacBook Pro. As confirmed in a battery life test by Linus Tech Tips, the M1 MacBook Pro lasted roughly 20 hours, compared to 11 hours for an Intel 13-inch MacBook Pro, 12 hours for the Intel-powered Dell XPS 13 and 13-inch MacBook Air, and 13 hours for an HP Envy x360 (Sebastian 2020). The MacBook Pro has the same sized battery in both Intel and M1 variants, yet the M1 MacBook Pro lasted an additional 9 hours thanks to the M1’s power efficiency.

What has Microsoft done with ARM in the past?

It seems as if Apple has its roadmap planned for a successful transition to its own ARM architecture. However, what does that mean for Windows?
Microsoft has had its fair share of failures in the past, and its attempt to run Windows on an ARM processor was not good enough to entice consumers to switch. In 2012 Microsoft released its first ARM-powered Surface 2-in-1 tablet. Torres, a writer for SlashGear, examined Microsoft’s past experiences with ARM devices: "Ever since the first Surface and Lumia 2520, Microsoft and Qualcomm have been working together to bring Windows to devices that promise long battery life, always on and always connected computing, and lightweight productivity. Save for the short-lived Windows Phone “spinoff”, it hasn’t all been that successful. And as they say, those who don’t learn from the past are bound to repeat it" (Torres 2019). Windows 10 at the time barely had the ability to translate any apps successfully from an Intel binary to an ARM-based binary. Traditionally, low-powered mobile PCs were still x86 based and used a slower processor such as an underclocked Intel Atom or Celeron CPU. ARM, on the other hand, achieves performance and efficiency with hardly any tradeoffs. Apple has been putting in the resources to develop on ARM for over 11 years, whereas Microsoft has only been developing for ARM during the last 4 years. Torres also elaborates on Microsoft’s failures to successfully integrate Windows on an ARM-based platform: "Windows’ sojourn outside the x86 world mostly failed on three accounts: software, performance, and expectations. Windows RT launched with an app store that was practically a ghost town and although the Microsoft Store now has more residents, it’s still a far cry from the populous Android and iOS towns. Microsoft sought to remedy that by introducing a compatibility layer that would make win32 software run on ARM hardware. Even on the newest Surface Pro X, performance on that front is inconsistent at best" (Torres 2019). Microsoft needs to look at Apple’s approach to ARM in order to successfully transition in the future.
Learning from Apple’s Success

The key to Apple’s success is its tight-knit community of developers. Based on Apple’s success, Microsoft should create new and improved developer tools to help transition, translate, and build apps that run on ARM. Its current lineup of ARM-supported apps in the Windows App Store is minimal and, as Torres calls it, “a ghost town”. Compare that to Apple’s Project Catalyst and Xcode tools, which allow any iOS app to run natively on macOS with just the click of a checkbox. Apple has millions of apps on the App Store which, together with Rosetta 2, fill the transition gap until developers make Mac-specific apps for the M1. Microsoft needs the support and interest of developers to build a platform that successfully runs on ARM processors. Windows 10 itself has gotten better, especially on the Qualcomm-powered Surface Pro X, but it still falls way behind with even the most mundane of tasks. The Surface line is behind in performance and efficiency when compared to the iPad Pro, which does not even run a full-fledged desktop operating system. Bohn, a notable tech journalist from The Verge, explains how a tight-knit and clear roadmap is necessary for success: "As it so often has over the past decade, Windows offers a roadmap of where things could go awry for the Mac. Windows on ARM still has unacceptable compromises for most users when it comes to software compatibility and expectations. I say this as a person who walked into those compromises’ eyes wide open, buying a Surface Pro X. I essentially use it as a glorified Chromebook and it’s very good at being that thing, but there’s no way Apple would want that for its Mac users” (Bohn 2020). It is like building an electric car without any charging infrastructure to support it. If Microsoft wants to fully commit to a transition to ARM, it needs a well thought out roadmap to dig itself out of its current rut.
The most crucial step to a successful transition is engaging with the developer community through proper tools and support, as Apple has demonstrated before. For years now, big cloud computing companies such as AWS have successfully run ARM-based CPUs in their compute and storage server farms. If Microsoft wants to compete with macOS and Linux machines in the server industry, then it may need to shift its focus from Office products and start to expand its Windows development team. Bohn points this out by saying: "Windows on ARM simply isn’t getting the developer attention and support that standard Windows gets, both within Microsoft and outside it. It was the same with many of Microsoft’s other Windows gambits — simply witness how many times it has rebooted its app framework strategy – a complete mess" (Bohn 2020). Microsoft’s strategy is to create a universal operating system for all platforms, which spreads development thin, whereas Apple focuses on a singular platform.

Intel’s Strategy and Moving Forward

In a press conference, Intel recently announced that it will be adopting a new business strategy called IDM 2.0, a $20 billion investment that allows it to open up its new production facilities in Arizona to produce custom chips for other companies. “As reported by Business Wire, Gelsinger today shared his idea for the evolution of Intel’s business model, which he calls “Integrated Device Manufacturing” or “IDM 2.0.” Intel wants to expand its operations to not only expand the development of its own chips, but also to build chips for third-party vendors” (Esposito 2021). This allows Intel to make money both from manufacturing its own chips and from building custom chips for others.
Esposito goes on to detail, “As competition has been increasing with AMD and other companies like Apple, which recently began transitioning from Intel processors to its own chips in Macs, Intel now wants to take a step further and open up its production capacity to build and deliver custom chips for third parties. The company wants to use its facilities in the US and Europe to meet the global demand for semiconductor manufacturing” (Esposito 2021). Intel understands that if it wants to remain relevant in this upcoming market transition, even if it doesn’t yet have its own ARM chip, it must offer a solution to compete with Apple and Nvidia. Gelsinger’s announcement specifically states that Intel will work with anyone to build processors. Gelsinger states: “As part of Intel’s Foundry Services, the company is announcing that it will work with customers to build SoCs with x86, Arm, and RISC-V cores, as well as leveraging Intel’s IP portfolio of core design and packaging technologies.” Gelsinger mentions specifically that Intel will work with RISC-V and ARM architectures to build custom chips, and ARM is exactly what Apple uses for its custom Apple Silicon processors. He elaborated on Intel’s interest in working with Apple during the Q&A session of the press conference. “Gelsinger mentioned that Intel is currently working with partners, including Amazon, Cisco, IBM, and Microsoft. But he pushed a bit further during a Q&A session with press, saying that he’s even pursuing Apple’s business” (Esposito 2021). This recent change in direction reaffirms the threat that ARM poses to the traditional x86 platform. By offering custom ARM-based processors to third parties, Intel can build ARM chips for others before it comes up with and produces its own ARM-based processor.

Conclusion

Apple’s success with mobile processors has proven to be a modern marvel in how we compute today.
Their chip architecture development team has delivered and consistently been ahead of market expectations every single year. Their up-and-coming desktop-class processors have shown strength that could influence the industry into transitioning to ARM processors. The transition comes down to the developer community and their interest, combined with the proper developer tools to support new platforms that run on ARM-based PCs. Microsoft has the ability to push the industry forward into that transition if it focuses its efforts and sticks to a single game plan. With Apple’s leadership in pushing ARM to the consumer market, end users will get to experience a whole new era of computing possibilities, all thanks to a singular architecture change that brings performance, value, and efficiency the likes of which have never been seen before. Thanks to this new architecture there has not been a better time than now to transition the entire PC industry to ARM processors, just as the industry has successfully done with mobile devices.

References

Blem, E., Menon, J., & Sankaralingam, K. (2013). A Detailed Analysis of Contemporary ARM and x86 Architectures. Minds at Wisconsin. https://minds.wisconsin.edu/bitstream/handle/1793/64923/Blem%20tech%20report.pdf?sequence=1&isAllowed=y

Bohn, D. (2020, June 10). What Windows can teach the Mac about the switch to ARM processors. The Verge. https://www.theverge.com/2020/6/10/21285866/mac-arm-processors-windows-lessons-transition-coexist

Casey, H. (2021, January 06). Apple M1 CHIP SPECS, release date, and how it compares to Intel. Tom’s Guide. https://www.tomsguide.com/news/apple-m1-chip-everything-you-need-to-know-about-apple-silicon-macs

Dignan, L. (2020, June 09). Apple to move Mac to Arm CPUS: What you need to know. ZDNET. https://www.zdnet.com/article/apple-to-move-mac-to-arm-cpus-what-you-need-to-know/

Dilger, Daniel Eran. Apple Silicon M1 13-inch MacBook Pro review - unprecedented power and battery for the money. Apple Insider. https://appleinsider.com/articles/20/11/17/apple-silicon-m1-13-inch-macbook-pro-review---unprecedented-power-and-battery-for-the-money

Esposito, Filipe. (2021, March 24). Intel to build ARM chips for other companies as part of its new business strategy. 9To5Mac. https://9to5mac.com/2021/03/23/intel-to-build-arm-chips-for-other-companies-as-part-of-its-new-business-strategy/

Fulton III, Scott. (2020, September 14). Arm processors: Everything you need to know. ZDNET. https://www.zdnet.com/article/introducing-the-arm-processor-again-what-you-should-know-about-it-now/

Goldheart, S. (2020, November 19). M1 MacBook Teardowns: Something old, something new. iFixit. https://www.ifixit.com/News/46884/m1-macbook-teardowns-something-old-something-new

Lei Xu, Zonghui. (2012, September 01). The study and evaluation of arm-based MOBILE virtualization. Sage Journals. https://journals.sagepub.com/doi/10.1155/2015/310308

Lovejoy, B. (2020, July 13). Switch to ARM won't just BE Macs, but better Windows PCs too. 9To5Mac. https://9to5mac.com/2020/07/13/switch-to-arm/

Nelson, Q. (Director). (2020, December 12). Apple M1: Much more than hardware [Video]. YouTube. https://www.youtube.com/watch?v=ff98l3P66i8

Regidi, Anirudh. (2020, November 14). The future of PCs is in Apple's arms: Breaking down the Apple-Intel Breakup. Tech2. https://www.firstpost.com/tech/news-analysis/the-future-of-pcs-is-in-apples-arms-now-heres-why-8554331.html

Sebastian, Linus. (2020, December 26). Apple made a BIG mistake – M1 MacBooks Review [Video]. YouTube. https://www.youtube.com/watch?v=KE-hrWTgDjk

Sims, Gary. (2017, October 02). Why are Apple's chips faster than Qualcomm's? - GARY EXPLAINS. Android Authority. https://www.androidauthority.com/why-are-apples-chips-faster-than-qualcomms-gary-explains-802738/

Torres, J. (2019, December 25). Windows on arm needs to tell a different story. Slash Gear. https://www.slashgear.com/qualcomms-and-microsofts-windows-on-arm-needs-to-tell-a-different-story-25604295/


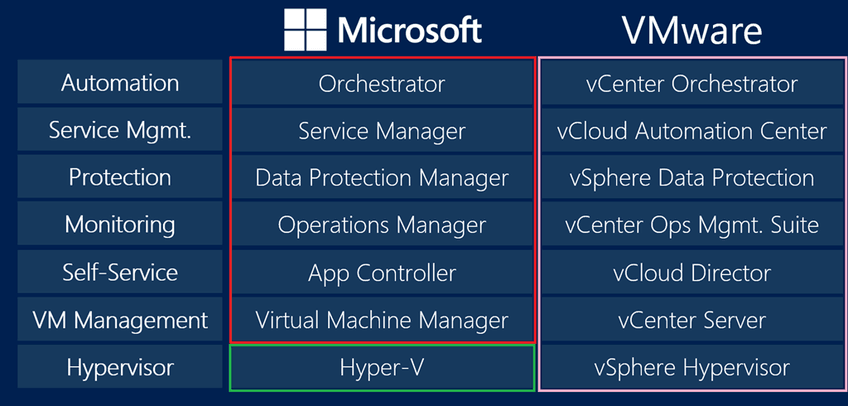

Written By Daniel Burns

Virtualization has been around since the 1960s as a method of logically dividing the system resources provided by mainframe computers between different applications (Virtualization - Wiki). Today we use it to run applications, operating systems, and even virtual hardware within another system. Hyper-V is virtualization software developed by Microsoft to run on Windows and Windows Server platforms. It can virtualize not only operating systems but also entire hardware components, such as hard drives and network switches. Unlike Fusion and VirtualBox, Hyper-V is not limited to the user’s device; you can use it for server virtualization, too (Iva 2018). Hyper-V is a Windows Server add-on that allows you to run multiple virtual servers on one dedicated server. It is also available for Windows 10 as a way to run almost any operating system on top of Windows.

To enable Hyper-V on your Windows device, you’ll need to be running a 64-bit version of your operating system with a minimum of 4GB of RAM (Iva 2018). Although Microsoft recommends a minimum of 4GB of RAM, it is better to have 8GB or more because that figure is exactly that, a minimum. Any virtual environment will need resources such as CPU cores, RAM, and storage space. The more you can throw at it without hindering your base operating system, the better your virtual environment will run.

So why might you use a virtual machine? Well, you might have an application that simply can’t run on your current operating system because it is either too old or incompatible. Instead of partitioning your drive to install a second operating system and boot into it, virtualization allows you to run that second OS inside of a window on your current OS. Pretty neat, huh? For example, I have an older version of a CAD program that is no longer available and can only run on Windows XP. I have specific files for that program that need manipulating or converting, but I can’t run it on my Windows 10 computer.
So using Hyper-V or any other program like VMware Fusion or Oracle’s VirtualBox, I can load up Windows XP and get my work done, all without leaving my desktop and messing with its boot configuration. You can run any operating system, from Windows to Linux and even macOS, on a PC with some custom boot loader configurations.

Hyper-V comes included with Windows 10 (Pro, Enterprise, and Education editions), so you don’t have to pay for or download anything extra. To use it you’ll have to go to Control Panel, click on “uninstall a program,” then select “turn Windows features on or off” on the left side. Next, scroll down to Hyper-V, check the box to enable it, and select OK. You can also enable Hyper-V from an administrator command line using this command: “DISM /Online /Enable-Feature /All /FeatureName:Microsoft-Hyper-V”. After it’s turned on, you can run it from search and set up your virtual environment according to your needs. You can configure the number of CPU cores, the RAM allotment, the storage size and location, and many more advanced settings such as sound and networking.

Beyond running older software, virtualization and Hyper-V fit many task-specific needs, whether you want to experiment with other operating systems or try unreleased, work-in-progress programs. Hyper-V makes it very easy to create and remove different operating systems to your heart’s content (Microsoft). You can test software on multiple operating systems using multiple virtual machines, and with Hyper-V you can run them all on a single desktop or laptop computer. These virtual machines can be exported and then imported into any other Hyper-V system, including Azure (Microsoft).
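The requirements and the command-line step above can be sketched in a few lines of Python. This is only an illustration, not an official Microsoft tool: the function names `hyperv_readiness` and `dism_enable_command` are made up for this example, the 4GB and 8GB thresholds are the figures quoted in this post, and the script only builds the DISM command as text rather than running it. Note that DISM spells the switch `/Enable-Feature`, with a hyphen.

```python
MIN_RAM_GB = 4          # minimum quoted in this post (Iva 2018)
RECOMMENDED_RAM_GB = 8  # this post's own suggestion, not an official figure


def hyperv_readiness(is_64bit: bool, ram_gb: float) -> str:
    """Classify a machine against the guidance in this post (hypothetical helper)."""
    if not is_64bit or ram_gb < MIN_RAM_GB:
        return "not supported"
    if ram_gb < RECOMMENDED_RAM_GB:
        return "minimum"
    return "recommended"


def dism_enable_command(feature: str = "Microsoft-Hyper-V") -> str:
    """Build the DISM command as text; nothing is executed here."""
    parts = ["DISM", "/Online", "/Enable-Feature", "/All", f"/FeatureName:{feature}"]
    return " ".join(parts)


if __name__ == "__main__":
    # Example: a 64-bit laptop with 8GB of RAM
    print(hyperv_readiness(is_64bit=True, ram_gb=8))
    print(dism_enable_command())
```

Keeping the command as a plain string makes it easy to review before pasting it into an elevated Command Prompt yourself.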

Now, Microsoft’s Hyper-V isn’t the only virtual machine software on the market today. It has major competition from VMware’s paid software, VMware Fusion and vSphere, and some from Oracle’s free program, VirtualBox. Let's focus on VMware, since the two compete directly with each other. Virtualization remains one of the hottest trends in business IT (Collins 2019). VMware offers a few advantages over Hyper-V, along with the major disadvantage of being a paid program. VMware has been in the server virtualization market for far longer than Microsoft. The company has been in business since 1998 and shipped its first product (VMware® Workstation) the following year. VMware released its first true server product (ESX® Server) in 2002 (Posey 2017). Hyper-V was only made available in 2008, when Microsoft announced it alongside Windows Server 2008, and it only made its way to the consumer in 2012 with the release of Windows 8. So VMware has more maturity and experience behind it. Both offer almost identical feature sets and their own learning curves, so it's up to you or your company to decide what is best suited for your needs (Posey 2017). VMware is a bit more consumer friendly with its user interface, and some novice users may find it easier to work with. Both should be around for a long time, so support shouldn't be a top issue to contend with when considering one over the other. VMware’s core hypervisor is less expensive than Microsoft’s. However, Microsoft’s management server costs less than VMware's (Posey 2017). So when everything is considered, it really comes down to what is best suited for you. As a consumer, you can’t go wrong with Hyper-V, since it is included with Windows and readily available. Virtualization software has come a long way, and the dream of having one physical computer run multiple systems under one roof is becoming easier, faster, and cheaper to achieve. Next up, cloud virtual computing on every connected device!… someday.
Follow Daniel Burns on Twitter, @DBurnsOfficial

References:
Docter, Q. (2018). CompTIA Security+: study guide. Hoboken, NJ: Sybex.
Hyper-V. (2020, February 28). Retrieved from https://en.wikipedia.org/wiki/Hyper-V
Scooley. (n.d.). Introduction to Hyper-V on Windows 10. Retrieved from https://docs.microsoft.com/en-us/virtualization/hyper-v-on-windows/about/
Scooley. (n.d.). Enable Hyper-V on Windows 10. Retrieved from https://docs.microsoft.com/en-us/virtualization/hyper-v-on-windows/quick-start/enable-hyper-v
Iva, Steve, Z. I. T. (2018, October 10). What Is Hyper-V & How Do You Use It? A Beginner's Guide. Retrieved from https://www.cloudwards.net/hyper-v/
Shinder, D. (2017, June 23). 10 things you should know about Hyper-V. Retrieved from https://www.techrepublic.com/blog/10-things/10-things-you-should-know-about-hyper-v/
Collins, T. (2019, December 19). Hyper-V vs. VMware: Which Is Best? Retrieved from https://www.atlantech.net/blog/hyper-v-vs.-vmware-which-is-best
Posey, B. (2019, October 7). Virtual Infrastructures: Hyper-V vs VMware. Retrieved from https://www.solarwindsmsp.com/blog/virtual-infrastructures-hyper-v-vs-vmware

“Watch Out Android Wear!”

One Week with the Apple Watch.

After having the Apple Watch for over a week now, I have found it to be a very enjoyable experience. From glancing at notifications in the classroom to logging my activity in PE or out on a run, it's actually been a very useful tool. This new product comes from the post-Steve Jobs era, with new CEO Tim Cook at the helm, and it is heading in the right direction for Apple’s future. The interface is something new to iOS users. There can be a slight learning curve at first, but Apple has made the new interface easier to navigate by keeping things fluid and familiar to its bigger brother operating system, iOS. It just takes getting used to, and after less than a day with it I got the hang of it. The heart rate tracking is actually quite fun. It's pretty cool to see your heart rate throughout the week, and with Apple’s new HealthKit, communication with your doctor is easier than ever. The new ability to submit or show your weekly and daily blood pressure and heart rate to your doctor is amazing. No more high blood pressure spikes at the doctor's office. Now you have a more accurate outlook on your health. Sleep tracking is kinda neat too. It's interesting to see your lowest heart rate while sleeping and an accurate record of how long you slept. The battery is as expected for a first-generation device. It just depends on what you do with it that day. If you do a lot, including workouts, notifications, and checking the time, you get the expected 18+ hours of use. I’ve gotten it to last about 2 days, which is great compared to the 18 hours quoted by Apple. Charging the watch is very cool. You just set it on its small puck-sized charger and it charges wirelessly by induction. It takes only 2 hours to get a full charge with the 5W adapter that comes with it and only 1 hour with Apple’s 12W adapter (sold separately). The Watch case and materials themselves are surprisingly durable.
It still feels like something Apple didn't make, and that's not a bad thing. It feels very premium on the wrist, which nails the whole point Jony Ive made when designing this watch. I have the Apple Watch Sport, 42 mm in space gray, which has the aluminum body and Ion-X glass. For example, in wood shop at school, I accidentally hit the center of the screen on a metal machine and it didn't even scratch. I was astonished at its durability. The higher-end models have the sapphire crystal screen, which is expected to be even better. So no worries here. Already there are tons of apps for the watch in the App Store. It surpassed Android Wear in available apps within just one day, so there are no problems finding an app. Android needs to step up its game if it wants to compete. All in all, this is a great new product that has the potential to live on. The Apple Watch is a game changer for the industry that will set new standards for other companies to follow. Whether you get the current Apple Watch, which is still on backorder after 4 million+ orders, or wait for the second generation, I assure you that you won’t be sorry with your purchase. I highly recommend the Apple Watch. Follow Daniel Burns on Twitter, @DBurnsOfficial

Meet the Writer

Daniel Burns is the co-owner of Adium Technologies and has been in the IT business since 2014. He is currently pursuing a master's degree in Cybersecurity Management at San Diego State University. He occasionally shares his rants on technology and strives to help make the use of technology easier for the everyday user. You can follow him on Twitter for his latest likes, rants, and opinions.
